Projects

Robot-Assisted Drilling on Curved Surfaces with Haptic Guidance under Adaptive Admittance Control

Drilling a hole at a desired angle on a curved surface is prone to failure when done manually because the drill is difficult to align and the task is inherently unstable, which can cause injury and fatigue for workers. On the other hand, fully automating such a task can be impractical in real manufacturing environments: parts arriving at an assembly line can have various complex shapes on which the drill points are not easily accessible, making automated path planning difficult.

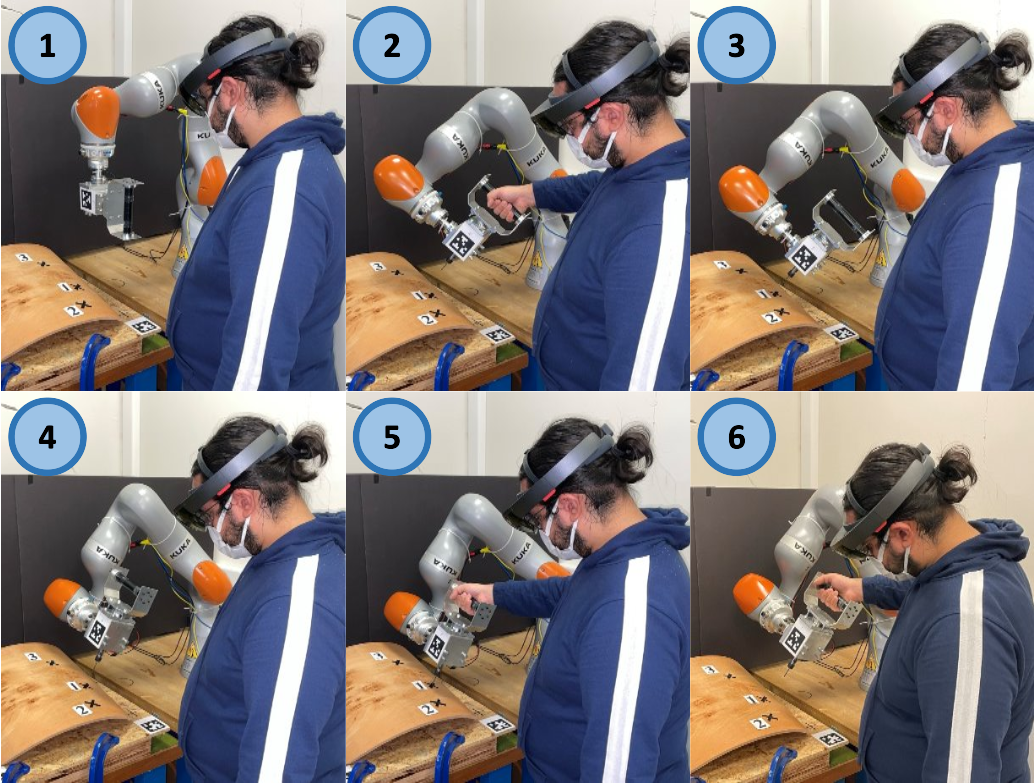

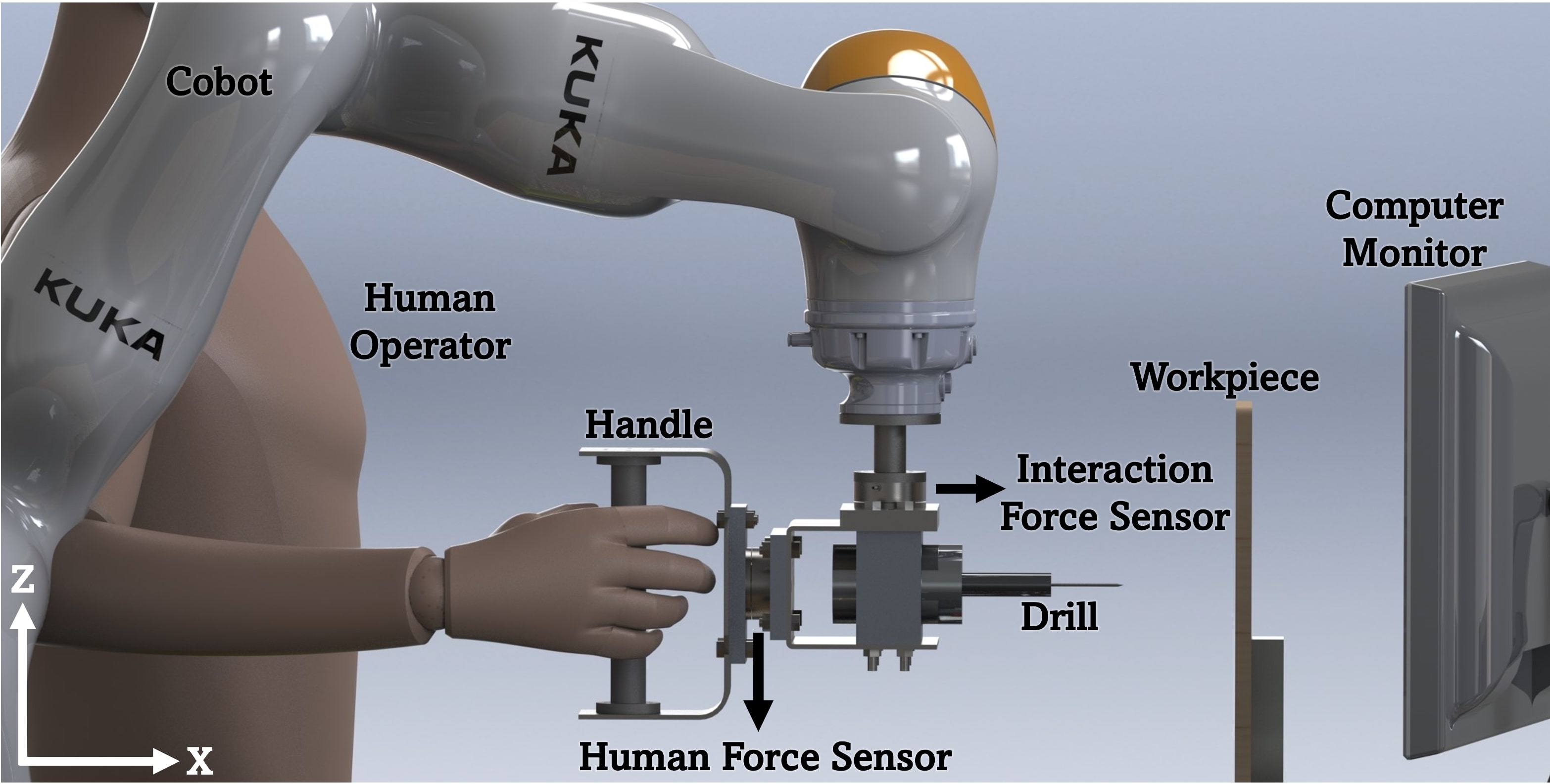

In this work, an adaptive admittance controller with 6 degrees of freedom is developed and deployed on a KUKA LBR iiwa 7 cobot so that the operator can comfortably manipulate a drill mounted on the robot with one hand and drill holes on a curved surface, with haptic guidance from the cobot and visual guidance provided through an AR interface.


My Contributions:

- Developed an admittance controller in C++ using the Fast Robot Interface (FRI) of the KUKA iiwa cobot.

- Developed a mixed reality (MR) interface in C# for Microsoft HoloLens, using the Unity game engine and Microsoft's Mixed Reality Toolkit (MRTK).

- Implemented a Kalman filter to track the 6-DoF movements of the HoloLens and the workpiece being drilled via visual fiducial markers (AprilTag) attached to them.

- Constructed a 3D mesh model of the workpiece using a depth camera (Microsoft Kinect).

- Established TCP communication between the robot and the HoloLens.
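As a rough illustration of the marker-tracking step listed above, the following is a minimal sketch of a Kalman filter applied to a single pose component. It is not the filter used in the project: it assumes a simple random-walk motion model, handles only one translational coordinate, and the names `ScalarKalman` and `smooth_track` as well as the noise variances `q` and `r` are hypothetical. A full 6-DoF tracker would also need a suitable treatment of orientation.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """Random-walk Kalman filter for one pose component (e.g. x position)."""
    x: float = 0.0    # current state estimate
    p: float = 1.0    # variance of the estimate
    q: float = 1e-4   # process noise variance (illustrative assumption)
    r: float = 1e-2   # measurement noise variance (illustrative assumption)

    def update(self, z: float) -> float:
        # Predict: the random-walk model keeps the state and grows the uncertainty.
        self.p += self.q
        # Correct: blend prediction and measurement using the Kalman gain.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= (1.0 - k)
        return self.x


def smooth_track(measurements, **kw):
    """Filter a non-empty sequence of noisy marker readings for one axis."""
    kf = ScalarKalman(x=measurements[0], **kw)
    return [kf.update(z) for z in measurements]


if __name__ == "__main__":
    noisy_x = [1.0, 1.2, 0.8, 1.1, 0.9]  # made-up marker x-coordinates in metres
    print(smooth_track(noisy_x))
```

Raising `r` relative to `q` makes the estimate smoother but slower to follow real motion, which is the usual trade-off when filtering fiducial marker detections.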

A. Madani, P. P. Niaz, B. Guler, Y. Aydin, and C. Basdogan, “Robot-Assisted Drilling on Curved Surfaces with Haptic Guidance under Adaptive Admittance Control,” IROS, 2022. Available:

An adaptive admittance controller for collaborative drilling with a robot based on subtask classification via deep learning

In this paper, we propose a supervised learning approach based on an Artificial Neural Network (ANN) model for real-time classification of subtasks in a physical human-robot interaction (pHRI) task involving contact with a stiff environment. In this regard, we consider three subtasks for a given pHRI task: Idle, Driving, and Contact. Based on this classification, the parameters of an admittance controller that regulates the interaction between human and robot are adjusted adaptively in real-time to make the robot more transparent to the operator (i.e., less resistant) during the Driving phase and more stable during the Contact phase. The Idle phase is primarily used to detect the initiation of the task.
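The adaptation idea described above can be sketched for a single axis as follows. This is only an illustration under stated assumptions: it uses the common admittance law m·dv/dt + b·v = F, takes the subtask label as given instead of running a classifier, and the parameter values in `PARAMS` as well as the function names are hypothetical and not taken from the paper.

```python
# Hypothetical admittance parameters per subtask (mass in kg, damping in N*s/m).
PARAMS = {
    "Idle":    {"m": 10.0, "b": 60.0},
    "Driving": {"m": 5.0,  "b": 10.0},  # low damping: robot feels more transparent
    "Contact": {"m": 10.0, "b": 80.0},  # high damping: interaction is more stable
}


def admittance_step(v, force, subtask, dt=0.002):
    """One explicit-Euler step of m*dv/dt + b*v = force for a single axis."""
    p = PARAMS[subtask]
    dv = (force - p["b"] * v) / p["m"]
    return v + dv * dt


def simulate(force, subtask, steps=5000, dt=0.002):
    """Apply a constant force from rest and return the final reference velocity."""
    v = 0.0
    for _ in range(steps):
        v = admittance_step(v, force, subtask, dt)
    return v


if __name__ == "__main__":
    for name in ("Driving", "Contact"):
        print(name, round(simulate(10.0, name), 3))
```

With the same applied force, the lower damping used for Driving yields a higher steady-state velocity (force divided by damping) than the setting used for Contact, which is the sense in which the robot resists the operator less during that phase.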


My Contributions:

- Developed a control architecture for regulating the physical interaction between a human and a robot (pHRI) via an admittance controller implemented on a KUKA iiwa cobot.

- Performed a linear time-varying stability analysis for the pHRI system.

- Developed a deep learning (DL) model to classify the subtasks of a task using the TensorFlow framework in Python.

- Developed methods to adapt the parameters of the admittance controller based on the output of the DL model.

- Developed a graphical user interface (GUI) in C# for reading and recording data from ATI force sensors.

- Designed pHRI experiments, collected data, and performed statistical analysis on the data.

B. Guler, P. P. Niaz, A. Madani, Y. Aydin, and C. Basdogan, “An adaptive admittance controller for collaborative drilling with a robot based on subtask classification via deep learning,” Mechatronics, vol. 86, p. 102851, 2022. Available:

Autonomous Manipulation and SLAM with the Hybrid Mobile Manipulator

This study demonstrates a soft robotic gripper as part of a mobile manipulator that executes the following tasks:


- Autonomous navigation using SLAM.

- Visual recognition of the boxes marked with ARTag markers.

- Pick-and-place manipulation of the objects located on top of the marked boxes.


My Contributions:

- Designed a serial robotic manipulator using Siemens NX and manufactured it using 3D printing.

- Designed a soft gripper based on PneuNets and analyzed it with finite element methods in Abaqus.

- Integrated the robotic manipulator with a mobile platform.

- Developed the ROS packages for the manipulator in Python.

- Tracked the positions of the marked boxes with OpenCV, using visual fiducial markers (ARTag) attached to the boxes.

- Implemented a SLAM algorithm from the ROS Navigation Stack to estimate the pose of the mobile platform using LIDAR and an IMU.

Autonomous Visual Fiducial Markers Recognition and Tracking

Approaching the Visual Fiducial Marker

Inverse Kinematics

[Active Project] Reinforcement Learning to Optimize Task Performance in Physical Human-Robot Co-Manipulation

In industry, complex tasks such as carrying heavy objects encourage human operators to work together with robots.

By combining the human's dexterity and intelligence with the robot's precision, heavy objects can be driven to and parked wherever the human operator wants, without programming in advance.

The initial aim of this study is to increase efficiency by understanding human motion intention.

However, because of the object's weight, the response to the human's force is observed with a delay. Therefore, the estimated human intention can help decide whether to amplify or attenuate the human force.

Constantly scaling in one direction is not efficient in terms of human effort because the co-manipulation task has different phases: amplification is preferable during driving, whereas attenuation matters more during parking.

The deep learning model provides an adaptive force scaling factor based on human motion intention, but it does not guarantee optimal human effort and comfort.

Deep reinforcement learning, a model-free optimal control approach, adjusts this adaptive force scaling factor to decrease human effort and increase comfort.
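The force scaling idea described above might look roughly like the sketch below. Everything in it is an assumption made for illustration: the intention estimate is taken to be a number in [-1, 1] (positive for accelerating, negative for decelerating), the linear mapping in `base_scale` stands in for the deep learning model, `rl_correction` stands in for the adjustment produced by the reinforcement learning agent, and the limits `lo` and `hi` are arbitrary.

```python
def base_scale(intention: float, gain: float = 0.5) -> float:
    """Map an intention estimate in [-1, 1] to a force scaling factor.

    +1 (accelerate, e.g. driving) gives amplification (> 1);
    -1 (decelerate, e.g. parking) gives attenuation (< 1).
    """
    intention = max(-1.0, min(1.0, intention))
    return 1.0 + gain * intention


def scaled_force(f_human, intention, rl_correction=0.0, lo=0.2, hi=2.0):
    """Scale the measured human force; the correction term is a placeholder
    for the adjustment a reinforcement learning agent would supply."""
    alpha = base_scale(intention) + rl_correction
    alpha = max(lo, min(hi, alpha))  # keep the factor within safe bounds
    return alpha * f_human


if __name__ == "__main__":
    print(scaled_force(10.0, 1.0))    # amplified while accelerating
    print(scaled_force(10.0, -1.0))   # attenuated while decelerating
```

Clamping the final factor is a design choice of this sketch: it keeps a learned correction from pushing the scaling outside a range the operator can comfortably handle.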


My Contributions:

- Developed an experimental setup for human-robot co-manipulation of a heavy object.

- Developed a deep learning (DL) model using TensorFlow in Python to estimate the human's intention to accelerate or decelerate the manipulated object.

- Developed a deep reinforcement learning (DRL) model, working with the DL model, to optimize task performance based on minimum jerk and human effort.

- Designed pHRI experiments, collected data, and performed statistical analysis on the data.